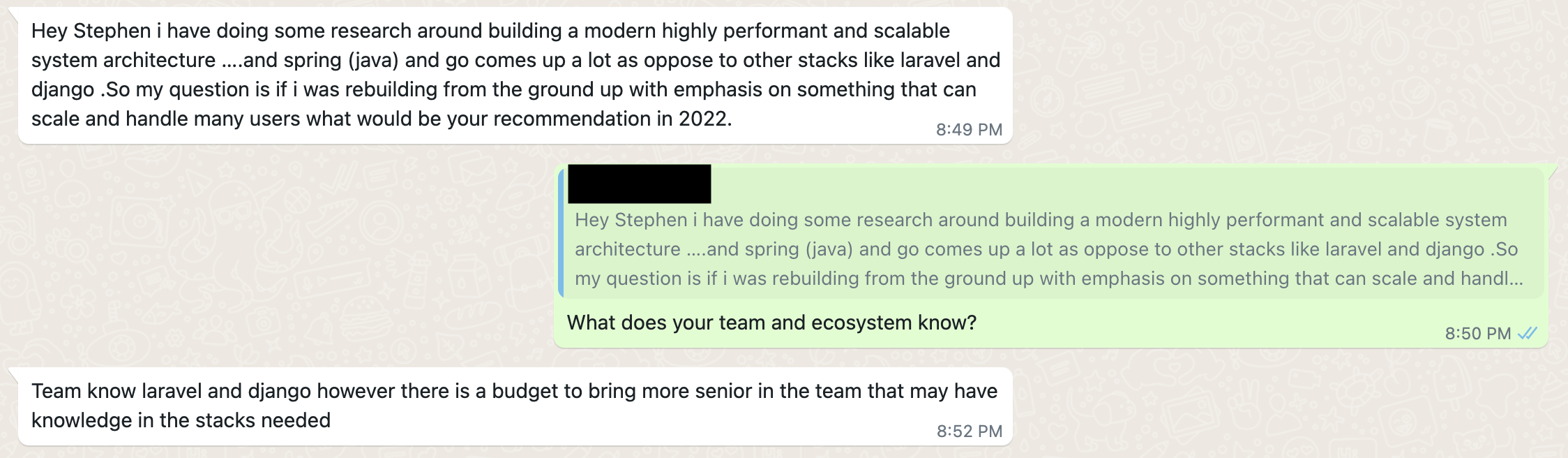

This post grew out of a discussion with a colleague who reached out to me for advice on whether to rebuild their successful e-commerce platform, whose usage has grown exponentially over the last 18 months.

My first piece of advice was that, in my experience, roughly 90% of platform rebuilds and re-architectures fail, not least because there are always unseen constraints in the new tooling that slow down the process. My approach is always to advise squeezing as much as you can out of your existing framework, language and platform before looking elsewhere.

While this post is Laravel-specific, the ideas apply to any framework, programming language or even mix of technologies.

As a Laravel developer who is passionate about architecture and solving scaling challenges, I use the following series of questions to drive the decision:

- Are you on the latest PHP 8.1 and Laravel 9?

- Upgrading to the latest versions of PHP and Laravel pulls in the latest performance improvements in the language and framework

- This also applies to the web server (Apache/Nginx)

- Is your database on the latest applicable version?

- Server hardware

- CPU – the latest generation of processors for the configuration

- OS patches – remove unused and unneeded services

- SSD disks for I/O-bound workloads

- RAM – the more the better

- Network configuration – avoid unnecessary round trips from the application to the database (see below too)

- Are your database and application well tuned? Think indexes, smaller requests and fewer queries

- Application tuning is an art that can be taught – for a database-heavy application like this one, Eloquent Performance Patterns by Jonathan Reinink (https://eloquent-course.reinink.ca/) is a key resource

- This also involves removing unused plugins and libraries, and leveraging the framework's best practices – Laravel Beyond CRUD (https://spatie.be/products/laravel-beyond-crud) and Front Line PHP (https://spatie.be/products/front-line-php) from the team at Spatie are excellent primers

- Laravel Debugbar (https://github.com/barryvdh/laravel-debugbar) makes it easy to see the memory usage per request during development

- Check the size of requests and responses – send only the data the operation needs, and paginate in the database, not in the application

- Add indexes to speed up table joins, redesign tables around current needs, and separate data collection from reporting (denormalization may be needed here)

- Use purpose-built API endpoints where the generic default CRUD ones do not perform well

- Is all that JavaScript necessary in the application pages?

- Is all that CSS necessary in the application pages?

- Is the database tuned for the hardware that it is running on?

- Are you using framework tools like queues to offload processing into asynchronous operations?

- Users often experience slow responses, especially in e-commerce setups, when work that could be asynchronous sits inside a synchronous workflow. For example, sending a confirmation email can add 2-5 seconds to the order response; doing it after the order is placed lets the ordering process complete faster for the customer

- For higher throughput, packages like Octane can provide the necessary performance boost

- Tweak the web server (Apache/Nginx) to handle more concurrent users

- Load balancing across more than one webserver can provide quick wins

- Increase your database resources – a bigger server, a tuned MySQL configuration

- Computing power is cheap, so a beefier server or more RAM may do the trick, depending on whether the bottlenecks are CPU-, disk- or memory-bound

- If a beefier server does not help, load balancing across multiple smaller servers, possibly split between stateful and stateless requests, could improve performance

- Concurrency using Laravel Vapor and Octane?

- Vapor is a paid service that brings serverless deployment to Laravel applications

- Octane increases request-handling concurrency, though application changes may be needed to work within the constraints it imposes

- Are you profiling your application with tools like Blackfire or Sentry to find performance bottlenecks?

- The performance improvement approach is measure, find the bottleneck, tweak to improve, then rinse and repeat

- Are there errors or failures that are causing the application to slow down?

- Have you refactored and cleaned up your data model to match the current reality?

- As applications evolve, old code and data columns need to be removed to suit the new reality

- Refactoring code to match reality removes any unnecessary baggage that is carried along

- Is your architecture the simplest it can be? Your organization may not need the complexity of a Fortune 500 or FAANG company (see https://future.a16z.com/software-development-building-for-99-developers/)

- There are hidden gems which can also be leveraged to improve your architecture & unearth performance issues

- Test-driven development – even if the tests are written after the code, unit test complex algorithms and run end-to-end tests over critical user paths

- Design for failure – especially for external services

- CI/CD – automate deployments to get new features, bug fixes and enhancements into production as fast as possible

- Set up staging sandboxes to test out ideas and tweaks

- Monitor your production application service health – Laravel Health (https://spatie.be/docs/laravel-health/v1/introduction) provides an excellent starting point
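The queue point in the list above (moving a confirmation email out of the synchronous order flow) can be sketched in a few lines. Since the ideas apply to any stack, this is a framework-neutral illustration in plain Python, not Laravel's queue API. Every name in it (`place_order_sync`, `place_order_async`, `send_confirmation_email`, `EMAIL_DELAY`) is hypothetical, and the mail delay is simulated and scaled down to 0.2 seconds so the sketch runs quickly.

```python
import queue
import threading
import time

# Assumption: stands in for the 2-5 s a real mail send can take, scaled down.
EMAIL_DELAY = 0.2

sent_emails = []       # records which orders had an email "sent"
jobs = queue.Queue()   # in-process stand-in for a real job queue


def send_confirmation_email(order_id):
    """Simulate a slow call to an external mail service."""
    time.sleep(EMAIL_DELAY)
    sent_emails.append(order_id)


def place_order_sync(order_id):
    """Synchronous flow: the customer waits for the email to be sent."""
    send_confirmation_email(order_id)
    return {"order_id": order_id, "status": "confirmed"}


def place_order_async(order_id):
    """Queued flow: enqueue the email and respond immediately."""
    jobs.put(order_id)
    return {"order_id": order_id, "status": "confirmed"}


def worker():
    """Background worker: processes queued emails until it sees None."""
    while True:
        order_id = jobs.get()
        try:
            if order_id is None:  # sentinel value: stop the worker
                return
            send_confirmation_email(order_id)
        finally:
            jobs.task_done()


def timed(fn, *args):
    """Return (result, elapsed_seconds) for a single call."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start
```

With a worker thread running `worker`, `timed(place_order_sync, 1)` takes at least as long as the simulated mail send, while `timed(place_order_async, 2)` returns almost immediately and the email goes out in the background. A real deployment would use a durable queue rather than an in-process one, so that jobs survive a restart.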

When all else fails and a platform rebuild is necessary, the Strangler Fig pattern is a great way forward: replace parts of the application incrementally with newer architectural pieces, as needed. See Legacy Application Strangulation: Case Studies (https://paulhammant.com/2013/07/14/legacy-application-strangulation-case-studies/) and Strangler Fig Application (https://martinfowler.com/bliki/StranglerFigApplication.html).
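As a rough illustration of the Strangler Fig idea, the sketch below puts a small routing facade in front of the old application and moves one path prefix at a time to a new implementation. It is a minimal, framework-neutral Python sketch rather than anything taken from the linked articles. The class `StranglerFacade`, the handlers `legacy_app` and `new_checkout`, and the example paths are all hypothetical.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def legacy_app(path: str) -> str:
    """Hypothetical stand-in for the existing application."""
    return f"legacy:{path}"


def new_checkout(path: str) -> str:
    """Hypothetical stand-in for a rebuilt checkout component."""
    return f"new:{path}"


class StranglerFacade:
    """Routes each request to a migrated handler, else to the legacy app."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, prefix: str, handler: Handler) -> None:
        """Send all paths under `prefix` to the new handler."""
        self._migrated[prefix.rstrip("/")] = handler

    def rollback(self, prefix: str) -> None:
        """Send `prefix` back to the legacy app if the new piece misbehaves."""
        self._migrated.pop(prefix.rstrip("/"), None)

    def handle(self, path: str) -> str:
        # Match whole path segments only, so "/checkout" does not
        # accidentally capture "/checkout-help".
        matches = [
            p for p in self._migrated
            if path == p or path.startswith(p + "/")
        ]
        if matches:
            # The longest (most specific) migrated prefix wins.
            return self._migrated[max(matches, key=len)](path)
        return self._legacy(path)
```

Calling `facade.migrate("/checkout", new_checkout)` on a `StranglerFacade(legacy_app)` moves only checkout traffic to the new code; everything else keeps hitting the legacy application, and `rollback` undoes the switch. In practice this routing usually lives in a load balancer or reverse proxy rather than in application code.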

What are your thoughts and suggestions? What has worked for you, and what pitfalls did you find? Any additional advice?